Industry Use Cases of Neural Networks

In this blog I start with a basic definition of a neural network and how it is used in industry. Neural networks are a core topic of deep learning, and they are loosely modeled on how the human brain works. The post goes from the basics to more advanced ideas: how a network is represented, how it computes its output, and how it learns.

Table of Contents:

- What’s a neural network?

- Neural network representation

- Forward Propagation

- Backward Propagation

- Why Deep Learning is taking off?

1. What’s a neural network?

It’s a machine learning technique that uses a network of functions to learn a mapping from high-dimensional input data to an output, so it can act as a classifier (or regressor). Neural networks have a layered structure where each layer takes input from the previous layer and transforms it using a non-linear activation function.

2. Representation:

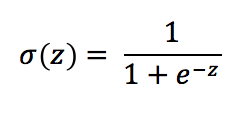

The sigmoid function is defined as:

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

Let’s call z the linear expression of the inputs, so

$$z = w^T X + b$$

and therefore, applying the sigmoid function to it, we get:

$$\hat{y} = \sigma(z) = \sigma(w^T X + b)$$

Great, so we got to the logistic regression model!

To recap, this is what we have defined so far:

We have an input, defined by a set of training examples X with labels Y, and an output ŷ, which is the sigmoid of a linear function: ŷ = σ(wᵀX + b).

We need to learn the parameters w and b so that, on the training set, the output ŷ (the prediction on the training set) will be close to the ground truth labels Y.
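The model recapped above can be sketched in a few lines of NumPy. This is only an illustration: the function name `predict_proba`, the toy values of `w`, `b` and `X`, and the 0.5 decision threshold are assumptions made for the example, not part of the original post.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid, applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))

def predict_proba(w, b, X):
    """Return y_hat = sigmoid(w^T X + b) for every column (example) of X."""
    return sigmoid(w.T @ X + b)

# Hypothetical toy values: 2 features, 3 training examples (one per column).
w = np.array([[0.5], [-0.25]])    # weights, shape (2, 1)
b = 0.1                           # bias
X = np.array([[1.0, 2.0, 0.0],
              [4.0, 0.0, 0.0]])   # inputs, shape (2, 3)

y_hat = predict_proba(w, b, X)      # shape (1, 3), every entry lies in (0, 1)
labels = (y_hat > 0.5).astype(int)  # assumed threshold of 0.5
```

Here `w` and `b` are fixed by hand; learning them from the training set is what the rest of the post is about.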

3. Forward propagation

We have tried to understand how humans work since time immemorial. In fact, even philosophy is, in effect, an attempt to understand the human thought process. But only in recent years have we started making progress on understanding how our brain operates. And this is where conventional computers differ from humans.

For first layer:

a1 = g(z1), a2 = g(z2), a3 = g(z3), where g is the activation function.

If we put the above equations in a matrix form they will look something like this where each row represents one neuron.
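As a sketch, assuming $w_{ij}$ denotes the weight from input $i$ to neuron $j$ (an index convention chosen here for illustration), each neuron computes $z_j = w_{1j} x_1 + w_{2j} x_2 + b_j$, and the three neurons stack into:

$$
\begin{bmatrix} z_1 \\ z_2 \\ z_3 \end{bmatrix}
=
\begin{bmatrix} w_{11} & w_{21} \\ w_{12} & w_{22} \\ w_{13} & w_{23} \end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \end{bmatrix}
+
\begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix},
\qquad \text{i.e.} \qquad Z = W^T X + b
$$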

where Z is a 3×1 column vector of z1, z2 and z3, W is a 2×3 weight matrix and X is a 2×1 input feature vector.

If we process the complete training set (m training examples) in one go, forward propagation looks like the following. Now Z1, A1 and A0 are 3×m, 3×m and 2×m matrices respectively.

Similarly, for the 2nd layer,

Forward propagation will generate the output for a given set of weights and biases. But, how to choose an optimal set of parameters?
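The layer-by-layer computation described above can be sketched in NumPy for all m examples at once. The shapes of the first layer follow the text (2 inputs, 3 hidden neurons). Everything else is an assumption made for illustration: the single sigmoid output unit, `tanh` as the hidden activation g, the random initial weights, and the name `forward`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(A0, params, g=np.tanh):
    """Forward pass through a 2-layer network, vectorized over m examples."""
    W1, b1, W2, b2 = params
    Z1 = W1.T @ A0 + b1   # (3, m): one row per hidden neuron
    A1 = g(Z1)            # (3, m): hidden activations
    Z2 = W2.T @ A1 + b2   # (1, m): assumed single output unit
    A2 = sigmoid(Z2)      # (1, m): predictions y_hat
    return A2, (Z1, A1, Z2)

rng = np.random.default_rng(0)
m = 5                                   # number of training examples
A0 = rng.normal(size=(2, m))            # inputs, one example per column
params = (
    rng.normal(size=(2, 3)) * 0.1,      # W1: 2 inputs -> 3 hidden neurons
    np.zeros((3, 1)),                   # b1, broadcast across the m columns
    rng.normal(size=(3, 1)) * 0.1,      # W2: 3 hidden neurons -> 1 output
    np.zeros((1, 1)),                   # b2
)

A2, (Z1, A1, Z2) = forward(A0, params)
```

The biases are stored as column vectors so that NumPy broadcasting adds them to every example's column.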

There are inputs to the neuron marked with yellow circles, and the neuron emits an output signal after some computation.

The input layer resembles the dendrites of the neuron and the output signal is the axon. Each input signal is assigned a weight, wi. This weight is multiplied by the input value and the neuron stores the weighted sum of all the input variables.

An activation function is then applied to the weighted sum, which results in the output signal of the neuron.
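A single neuron of this kind can be sketched as a weighted sum followed by an activation function. The function name `neuron`, the optional bias `b`, the choice of ReLU as the default activation, and the toy numbers are assumptions for illustration only.

```python
import numpy as np

def relu(s):
    """Rectified linear unit: max(0, s)."""
    return np.maximum(0.0, s)

def neuron(x, w, b=0.0, activation=relu):
    """Weighted sum of the inputs, then an activation function."""
    weighted_sum = np.dot(w, x) + b
    return activation(weighted_sum)

x = np.array([1.0, 2.0, 3.0])     # input signals
w = np.array([0.2, -0.5, 0.1])    # one weight per input

out_relu = neuron(x, w)                      # weighted sum is negative, so ReLU outputs 0
out_tanh = neuron(x, w, activation=np.tanh)  # same sum, different activation
```

Swapping the activation changes the output signal without touching the weighted sum, which is why the two steps are kept separate.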

A popular example of neural networks is the image recognition software which can identify faces and is able to tag the same person in different lighting conditions as well. That being said, let us understand forward propagation in more detail now.

4. Backward propagation:

The main goal of any machine learning algorithm is to minimize error. For this, we can update the learning parameters like weights and biases using gradient descent. This method of updating the parameters for each layer in a neural network is done using back-propagation. It’s called back-propagation because we update the parameters starting from the last layer and moving backward to the first layer.

Let’s understand it for a binary classifier using the loss function of logistic regression
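For reference, the standard logistic regression loss (binary cross-entropy) for one example, and the cost averaged over the m training examples, are usually written as:

$$L(\hat{y}, y) = -\big(y \log \hat{y} + (1 - y)\log(1 - \hat{y})\big)$$

$$J(w, b) = \frac{1}{m}\sum_{i=1}^{m} L\big(\hat{y}^{(i)}, y^{(i)}\big)$$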

Here, L(ŷ, y) is the loss function, and J(w, b) is the cost function. The gradients of the loss function with respect to Z, W, b and A are:
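As a sketch, assuming a sigmoid output layer, the convention $Z = W^T A_{prev} + b$ (which matches the 2×3 weight matrix of the forward propagation section), and the common shorthand where $dZ$ stands for the derivative of the loss with respect to $Z$, with $dW$ and $db$ averaged over the m examples:

$$
dZ = A - Y, \qquad
dW = \frac{1}{m} A_{prev}\, dZ^T, \qquad
db = \frac{1}{m}\sum_{i=1}^{m} dZ^{(i)}, \qquad
dA_{prev} = W\, dZ
$$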

where all d/dx are partial derivatives.

We will update the weights as follows
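In the usual gradient descent form, with J the cost function, the update for each parameter can be written as:

$$w := w - \alpha \frac{\partial J}{\partial w}, \qquad b := b - \alpha \frac{\partial J}{\partial b}$$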

where α is the learning rate.

We repeat this process of updating weights multiple times until we reduce the error to the desired threshold.
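The repeated update described above can be sketched for the logistic regression model ŷ = σ(wᵀX + b). This is a minimal illustration, not a definitive implementation: the function names, the learning rate of 0.5, the cost threshold of 0.1, the iteration cap, and the toy OR-style dataset are all assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cost(A, Y):
    """Mean binary cross-entropy; eps guards against log(0)."""
    eps = 1e-12
    return float(-np.mean(Y * np.log(A + eps) + (1 - Y) * np.log(1 - A + eps)))

def train(X, Y, alpha=0.5, threshold=0.1, max_iters=10_000):
    """Gradient descent until the cost drops below `threshold`."""
    n, m = X.shape
    w = np.zeros((n, 1))
    b = 0.0
    history = []
    for _ in range(max_iters):
        A = sigmoid(w.T @ X + b)    # forward pass, shape (1, m)
        J = cost(A, Y)
        history.append(J)
        if J < threshold:           # error is small enough, stop
            break
        dZ = A - Y                  # gradient w.r.t. z, shape (1, m)
        dw = X @ dZ.T / m           # gradient w.r.t. w, shape (n, 1)
        db = float(np.sum(dZ) / m)  # gradient w.r.t. b
        w -= alpha * dw             # parameter update with learning rate alpha
        b -= alpha * db
    return w, b, history

# Hypothetical toy data: the label is the logical OR of the two inputs.
X = np.array([[0.0, 0.0, 1.0, 1.0],
              [0.0, 1.0, 0.0, 1.0]])
Y = np.array([[0, 1, 1, 1]])

w, b, history = train(X, Y)
preds = (sigmoid(w.T @ X + b) > 0.5).astype(int)
```

The `history` list records the cost at each iteration, which makes it easy to check that the error is actually going down.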

5. Why Deep Learning is taking off?

As shown in the above plot, the performance of deep learning keeps improving as the amount of data grows, whereas traditional machine learning algorithms stop gaining much from additional data after a certain point. So, in this era of Big Data, with GPUs, we are able to train deep learning models a lot faster than was possible 20 years ago. Also, a lot of work has gone into improving deep learning algorithms to make them run faster.

Thank you for reading.
